
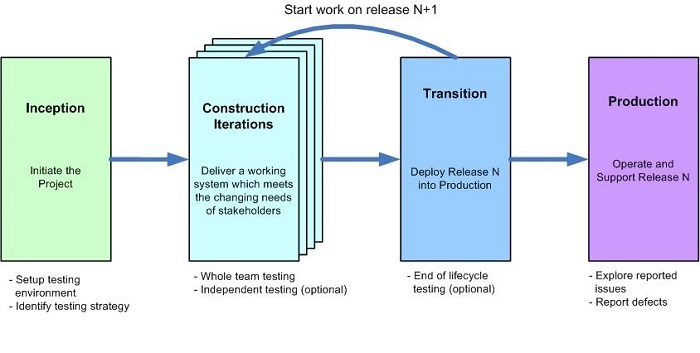

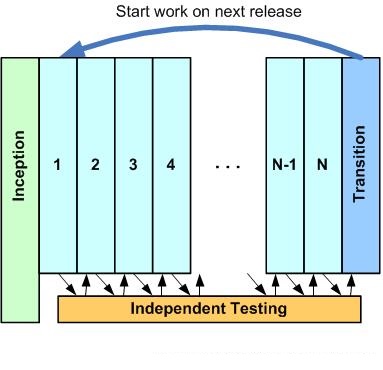

3. Agile Testing Strategies

To understand how testing activities fit into agile system development, it is useful to look at them from the point of view of the system delivery lifecycle (SDLC). Figure 10 is a high-level view of the agile SDLC, indicating the testing activities at various SDLC phases. This section is organized into the following topics:

- Project initiation
  - The whole team
  - The independent test team
  - Test environment setup
- Development team testing
  - Continuous integration
  - Test-driven development (TDD)
  - Test-immediately after approach
- Parallel independent testing
- Defect management
- End-of-lifecycle testing
- Who is doing this?
- Implications for test practitioners

Figure 10. Testing throughout the SDLC.

3.1 Project Initiation

Project initiation is often called "Sprint 0" in Scrum or "Iteration 0" in other agile methods. During this phase, your goal is to get your team going in the right direction. The mainstream agile community doesn't like talking about this much, but in reality the phase can last anywhere from several hours to several weeks. Its length depends on the nature of the project and the culture of your organization.

From the point of view of testing, the main tasks are to organize how you will approach testing and to start setting up your testing environment if it doesn't already exist. During this phase you will be doing initial requirements envisioning (as described earlier) and architecture envisioning. As a result of that effort you should gain a better understanding of:

- the scope of the project;
- whether your project must comply with external regulations such as the Sarbanes-Oxley Act or the FDA's CFR 21 Part 11 guidelines;
- potentially, some high-level acceptance criteria for your system.

All of this information should help you decide how much testing you will need to do.

It is important to remember that one process size does not fit all. Different project teams will have different approaches to testing because they find themselves in different situations: the more complex the situation, the more complex the approach to testing (amongst other things). Teams in simple situations may find that a "whole team" approach to testing is sufficient. Teams in more complex situations will also need an independent test team working in parallel to the development team. Either way, there will always be some effort involved in setting up your test environment.
3.1.1 The "Whole Team" Organizational Strategy

A common organizational strategy in the agile community is for the team to include the right people, so that it has the skills and perspectives it needs to succeed. Kent Beck popularized this strategy in Extreme Programming Explained, 2nd Ed. To deliver a working system successfully on a regular basis, the team needs people with analysis skills, design skills, programming skills, leadership skills and, yes, even testing skills. This isn't a complete list of the skills the team requires, and it doesn't imply that everyone on the team has all of them. Everyone on an agile team contributes in any way they can, which increases the overall productivity of the team. This strategy is called "whole team".

With a whole team approach, testers are "embedded" in the development team and actively participate in all aspects of the project. Agile teams are moving away from the traditional approach, in which each person focuses on a single specialty. For example, Sally just does programming, Sanjiv just does architecture, and John just does testing. Instead, people strive to become generalizing specialists with a wider range of skills. So Sally, Sanjiv, and John will all be willing to get involved with programming, architecture, and testing activities. More importantly, they will be willing to work together and learn from one another so that they get better over time.
Sally's strengths may still lie in programming, Sanjiv's in architecture, and John's in testing, but those won't be the only things they do on the agile team. It's OK if Sally, Sanjiv, and John are new to agile and are currently only specialists. By adopting non-solo development practices and working in short feedback cycles, they will quickly pick up new skills from their teammates (and pass their existing skills on to them too).

This approach can be significantly different from what traditional teams are used to. On traditional teams, programmers (specialists) commonly write code and then "throw it over the wall" to testers (also specialists). The testers test it and report suspected defects back to the programmers. This is better than no testing at all, but it often proves costly and time-consuming because of the hand-offs between the two groups of specialists. On agile teams, programmers and testers work side by side, and over time the distinction between the two roles blurs into the single role of developer. An interesting philosophy in the agile community is that real IT professionals should validate their own work to the best of their ability, and strive to get better at doing so over time.

The whole team strategy isn't perfect, and there are several potential problems:

- Group think. Whole teams, like any other type of team, can suffer from what's known as "group think". Because team members work closely together (something agile teams strive to do), they begin to think alike and can become blind to some of the problems they face. For example, your system could have significant usability problems that nobody on the team is aware of. Perhaps nobody on the team had usability skills to begin with. Or perhaps the team mistakenly decided to downplay usability concerns at some point, and everyone has since forgotten that decision.
- The team may not actually have the skills it needs. It's easy to declare that you're following the "whole team" strategy, but sometimes much harder to actually do so. Some people may overestimate their skills, or underestimate which skills are needed, and thereby put the team at risk. For example, database design is a common weakness amongst programmers, for three reasons:
  - a lack of training in such skills;
  - over-specialization within organizations where "data professionals" focus on data skills and programmers do not;
  - a cultural impedance mismatch between developers and data professionals, which makes it difficult to pick up data skills.

  Worse yet, database encapsulation strategies, such as object-relational mapping frameworks like Hibernate, encourage these "data-challenged" programmers to believe they don't need to know anything about database design, because access to the database is encapsulated. The team members believe they have the skills to get the job done when in fact they do not. The system suffers because nobody on the team can design and use the database appropriately.
- The team may not know what skills are needed. Even worse, the team may be oblivious to which skills are actually needed. For example, the system could have very significant security requirements. If the team didn't realize that security was likely to be a concern, they could miss those requirements completely or misunderstand them, and inadvertently put the project at risk.

Luckily, the benefits of the whole team approach tend to far outweigh the potential problems:

1. Whole team appears to increase overall productivity. It reduces, and often eliminates, the wait time between programming and testing that is inherent in traditional approaches.
2. There is less need for paperwork such as detailed test plans, because there are no hand-offs between separate teams.
3. Programmers quickly start to learn testing and quality skills from the testers, and as a result do better work to begin with. When developers know they'll be actively involved in the testing effort, they are more motivated to write high-quality, testable code in the first place.

3.1.2 The Independent Test Team (Advanced Strategy)

The whole team approach works well in practice when agile development teams find themselves in reasonably straightforward situations. A whole team approach to testing proves insufficient, however, when the environment is complex. This happens when:

- the problem domain itself is inherently complex;
- the system is large (often the result of supporting a complex domain);
- the system needs to integrate into your overall infrastructure, which includes a myriad of other systems.

Teams in complex environments, and teams in regulatory compliance situations, will often discover that they need to supplement their whole team with an independent test team. This test team performs parallel independent testing throughout the project. It is typically also responsible for the end-of-lifecycle testing performed during the release/transition phase of the project. The goal of these efforts is to find out where the system breaks and report those breakages to the development team so that they can fix them. (Whole team testing, by contrast, often focuses on confirmatory testing, which shows that the system works.) The independent test team focuses on more complex forms of testing that are typically beyond the ability of the "whole team" to perform on their own; more on this later.

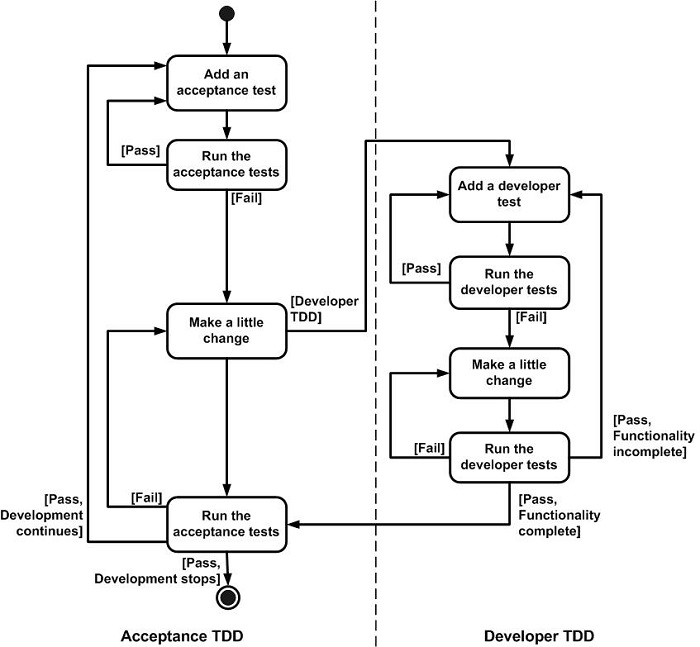

Your independent test team will support multiple project teams. Most organizations have many development teams working in parallel, often dozens and sometimes even hundreds. You can therefore achieve economies of scale by having one independent test team support many development teams. This lets you minimize the number of testing tool licenses you need and share expensive hardware environments. It also lets testing specialists (such as people experienced in usability testing or investigative testing) support many teams.

An agile independent test team works significantly differently from a traditional one. The agile independent test team focuses on a small minority of the testing effort, the hardest part of it, while the development team does the majority of the testing grunt work. With a traditional approach, the test team would often do both the grunt work and the complex forms of testing. To put this in perspective, the ratio of people on agile development teams to people on the agile independent test team is often 15:1 or 20:1. In the traditional world these ratios are often closer to 3:1 or 1:1 (and in regulatory environments may be 1:2 or more).

3.1.3 Testing Environment Setup

At the beginning of your project you will need to start setting up your environment, including your work area, your hardware, and your development tools (to name a few things). You will naturally also need to set up your testing environment. You can build it from scratch if you don't currently have one, or tailor an existing environment to meet your needs. There are several strategies I typically suggest when it comes to organizing your testing environment:

- Adopt open source software (OSS) tools for developers. There are many great OSS testing tools aimed at developers, such as the xUnit testing framework. These tools are often easy to install and easy to learn to use (although learning how to test effectively is another matter).
- Adopt commercial tools for independent testers. Your independent test team will often address more complex testing issues, such as integration testing across multiple systems (not just the single system the development team is focused on), security testing, and usability testing. They will need testing tools sophisticated enough to address these issues.
- Have a shared bug/defect tracking system. As you see in Figure 2, and as I mentioned in the previous section, the independent test team sends defect reports back to the development team. The development team in turn often treats these defect reports as just another type of requirement, so it needs tooling support to do this. When the team is small and co-located, as we see at Agile Process Maturity Model (APMM) levels 1 and 2, this could be as simple as a stack of index cards. In more complex environments you'll need a software-based tool. Ideally it manages both the team's requirements and the team's defects (or, more importantly, their entire work item list). More on this later.
- Invest in testing hardware. Both the development team and the independent test team will need hardware on which to do testing.
- Invest in virtualization and test lab management tools. Test hardware is expensive and there's never enough. Two kinds of tools are critical to the success of your independent test team, particularly if it supports multiple development teams:
  - virtualization software, which lets you load a testing environment onto your hardware easily;
  - test lab management tools, which let you track hardware configurations.
- Invest in continuous integration (CI) and continuous deployment (CD) tools. These are not strictly a category of testing tool, but they are critical for agile development teams. Practices such as test-driven development (TDD) and developer regression testing in general depend on them. Teams also need to deploy working builds into independent testing environments, demo environments, or even production. Open source tools such as Maven and CruiseControl work well in straightforward situations. In more complex situations (particularly at scale) you'll find that you need commercial CI/CD tools.

3.2 Development Team Testing Strategies

Agile development teams generally follow a whole team strategy: people with testing skills are embedded in the development team, and the team is responsible for the majority of the testing. This strategy works well in most situations. When your environment is more complex, though, you'll find that you also need an independent test team working in parallel to the development team, and potentially performing end-of-lifecycle testing as well. Whatever the situation, agile development teams adopt practices such as continuous integration, which lets them do continuous regression testing. They do so with either a test-driven development (TDD) approach or a test-immediately after approach.

3.2.1 Continuous Integration (CI)

Continuous integration (CI) is a practice where, at least once every few hours and preferably more often, you:

- Build your system. This includes compiling your code and potentially rebuilding your test database(s). This sounds straightforward, but large systems are composed of subsystems. You need a strategy for building both the subsystems and the overall system. For example, you might do a complete system build only once a night or once a week, and build the subsystems on a regular basis.
- Run your regression test suite(s). When the build succeeds, the next step is to run your regression test suite(s). Which suite(s) you run depends on the scope of the build and the size of the system:
  - Small systems. You'll likely have a single test suite containing all tests.
  - Developer suite. In more complex situations you will have several test suites for performance reasons. For example, when I build on my own workstation I will likely run only a subset of the tests. These are the tests that validate the functionality the team has been working on lately (say, over the past several iterations), because that functionality is the most likely to break. I may even run these tests against "mock objects". I am likely to run this suite several times a day, so it needs to run fast: preferably in less than 5 minutes, although some people allow their developer suite to take up to ten minutes. Mock objects simulate portions of the system that are expensive to test from a performance point of view, such as a database or an external subsystem.
  - Full suite. If you're using mocks, or testing only a portion of the functionality, you'll need one or more test suites of greater scope. At a minimum, you'll need a suite that runs all your tests against the real system components, not mocks. If this suite finishes within a few hours, it can run nightly.
  - Long-running suite. If the full suite takes longer than that, reorganize it so that the very long-running tests sit in another suite that runs irregularly. For example, I know of one system whose long-running test suite runs for several months at a time, performing complex load and availability tests (something an independent test team is likely to do).
- Perform static analysis. Static analysis is a quality technique where an automated tool checks the code for defects. It often looks for problems such as security defects or coding style issues (to name a few).

Your integration job could run at specific times, perhaps once an hour. Alternatively, it could run every time someone checks in a new version of a component (such as source code) that is part of the build.

Advanced teams, particularly those in an agility at scale situation, will also need to consider continuous deployment. The basic idea is that you automate the deployment of your working build into other environments on a regular basis; some organizations call this promoting the working build. For example, if you have a successful build at the end of the week, you might automatically deploy it to a staging area where it can be picked up for parallel independent testing. Or if there's a working build at the end of the day, you might deploy it to a demo environment so that people outside your team can see the progress your team is making.

3.2.2 Test-Driven Development (TDD)

Test-driven development (TDD) is an agile development practice that combines:

- Refactoring. Refactoring is a technique where you make a small change to your existing source code or source data to improve its design without changing its semantics. Examples of refactorings include:
  - in application source code, moving an operation up the inheritance hierarchy or renaming an operation;
  - in user interfaces, aligning fields or applying a consistent font;
  - in a relational database, renaming a column or splitting a table.

  When you first start work on a task, look at the existing source and ask whether its design is the best possible one for adding the new functionality. If it is, proceed with TFD. If not, invest the time to fix just the portions of the code and data that need it. This improves quality and thereby reduces your technical debt over time.
- Test-first development (TFD). With TFD you write a single test and then write just enough software to fulfill that test. The UML activity diagram in Figure 11 gives an overview of the steps:
  1. Quickly add a test, with basically just enough code to fail.
  2. Run your tests to make sure the new test does in fact fail. This is often the complete test suite, although for the sake of speed you may decide to run only a subset.
  3. Update your functional code so that it passes the new test.
  4. Run your tests again. If they fail, update your functional code and retest.
  5. Once the tests pass, start over.
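The TFD steps above, followed by a refactoring, can be sketched with Python's built-in `unittest` framework, a member of the xUnit family. This is a minimal illustration under assumptions, not a prescribed workflow:

- `order_total`, `apply_discount`, and the repository's `price_of` method are hypothetical names invented for the example.
- The repository stands in for a database. It is replaced by a `unittest.mock.Mock`, the kind of mock object a fast developer regression suite might use.

```python
import unittest
from unittest.mock import Mock


# Step 1 (red): these tests were written before order_total existed, so the
# first run failed. The repository is a hypothetical stand-in for a database;
# Mock replaces it so that the developer suite stays fast.
class OrderTotalTest(unittest.TestCase):
    def test_total_applies_discount(self):
        repo = Mock()
        repo.price_of.side_effect = {"apple": 2.0, "pear": 3.0}.get
        self.assertAlmostEqual(order_total(repo, ["apple", "pear"], 0.10), 4.5)
        repo.price_of.assert_any_call("pear")

    def test_empty_order_costs_nothing(self):
        repo = Mock()
        self.assertEqual(order_total(repo, [], 0.10), 0.0)
        repo.price_of.assert_not_called()


# Step 2 (green), then a refactoring: the discount arithmetic was extracted
# into apply_discount without changing behaviour, and the tests still pass.
def apply_discount(amount, rate):
    """Return amount reduced by the fractional discount rate."""
    return amount * (1 - rate)


def order_total(repo, items, rate):
    """Sum the repository price of each item, then apply the discount."""
    subtotal = sum(repo.price_of(item) for item in items)
    return apply_discount(subtotal, rate)
```

If the code is saved as, say, `test_order.py`, the tests run with `python -m unittest test_order`. In a real TFD session the test class would exist, and fail, before either function was written. Extracting `apply_discount` is the refactoring step, and it is safe only because the tests still pass afterwards.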

Figure 11. The steps of test-first development (TFD).

There are two levels of TDD:

- Acceptance TDD. You can do TDD at the requirements level by writing a single customer test, the equivalent of a function test or acceptance test in the traditional world. Acceptance TDD is often called behavior-driven development (BDD) or story test-driven development. You first automate a failing story test (a customer acceptance test), then drive the design via TDD until the story test passes.
- Developer TDD. You can also do TDD at the design level with developer tests.

With a test-driven development (TDD) approach, your tests effectively become detailed specifications created on a just-in-time (JIT) basis. Like it or not, most programmers don't read a system's written documentation; they prefer to work with the code, and there's nothing wrong with that. When trying to understand a class or operation, most programmers first look for sample code that already invokes it. Well-written unit/developer tests provide exactly that: a working specification of your functional code. As a result, unit tests become a significant portion of your technical documentation. Similarly, acceptance tests can form an important part of your requirements documentation. This makes sense when you stop and think about it. Your acceptance tests define exactly what your stakeholders expect of your system, so they specify your critical requirements. In other words, acceptance TDD is a JIT detailed requirements specification technique, and developer TDD is a JIT detailed design specification technique. Writing executable specifications is one of the best practices of Agile Modeling.
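The two levels of TDD can be illustrated with a small sketch. To keep it self-contained, Python's `unittest` is used for both levels; in practice the story test might live in a customer-facing tool such as FitNesse instead. The "withdraw cash" story, the `Account` class, and `InsufficientFunds` are hypothetical.

- The story test is phrased in the stakeholder's Given/When/Then terms and acts as the JIT requirements specification.
- The developer tests pin down design decisions, such as rejecting a zero amount, that emerged while making the story pass.

```python
import unittest


class InsufficientFunds(Exception):
    """Raised when a withdrawal would overdraw the account (hypothetical)."""


class Account:
    def __init__(self, balance):
        self.balance = balance

    def can_withdraw(self, amount):
        # Design rule driven out by a developer test: amount must be positive.
        return 0 < amount <= self.balance

    def withdraw(self, amount):
        if not self.can_withdraw(amount):
            raise InsufficientFunds(amount)
        self.balance -= amount
        return amount


# Acceptance (story) test: written first, in the stakeholder's terms, and left
# failing until the whole story works.
class WithdrawCashStoryTest(unittest.TestCase):
    def test_customer_with_enough_funds_gets_cash(self):
        account = Account(balance=100)          # Given a balance of 100
        dispensed = account.withdraw(40)        # When the customer withdraws 40
        self.assertEqual(dispensed, 40)         # Then 40 is dispensed
        self.assertEqual(account.balance, 60)   # And the balance is now 60

    def test_customer_cannot_overdraw(self):
        account = Account(balance=30)           # Given a balance of 30
        with self.assertRaises(InsufficientFunds):
            account.withdraw(50)                # When 50 is requested, it is refused
        self.assertEqual(account.balance, 30)   # And the balance is unchanged


# Developer tests: finer-grained design checks written during the inner TDD
# cycles that were needed to make the story test pass.
class AccountDesignTest(unittest.TestCase):
    def test_zero_amount_is_rejected(self):
        self.assertFalse(Account(10).can_withdraw(0))

    def test_exact_balance_is_allowed(self):
        self.assertTrue(Account(10).can_withdraw(10))
```

Read against Figure 12, the story test would be written first and would stay red while several developer-level TDD cycles drove out `Account`. Each of those cycles adds a test such as `test_zero_amount_is_rejected`.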

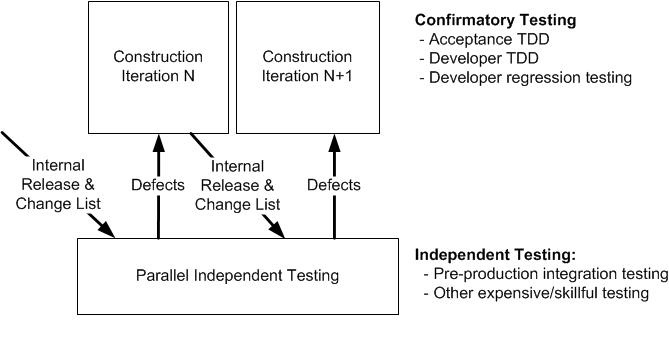

Figure 12. How ATDD and developer TDD fit together.

With ATDD you are not required to also take a developer TDD approach to implementing the production code, although the vast majority of teams doing ATDD also do developer TDD. As you see in Figure 12, combining ATDD and developer TDD means that a single acceptance test requires several iterations of the developer TDD cycle: write a test, write production code, get it working. Clearly, to make TDD work you need one or more testing frameworks. For acceptance TDD people use tools such as FitNesse or RSpec. For developer TDD, agile software developers often use the xUnit family of open source tools, such as JUnit or VBUnit. Commercial testing tools are also viable options. Without such tools TDD is virtually impossible. The greatest challenge in adopting ATDD is a lack of skills amongst existing requirements practitioners. This is yet another reason to promote generalizing specialists within your organization over narrowly focused specialists.

3.2.3 Test-Immediately After Approach

Although many agilists talk about TDD, in reality far more people seem to do "test after" development: they write some code and then write one or more tests to validate it. TDD requires significant discipline, a level of discipline found in few coders. This is particularly true of coders who follow solo approaches to development instead of non-solo approaches such as pair programming. Without a pair keeping you honest, it's easy to fall back into the habit of writing production code before writing test code. If you write the tests very soon after the production code ("test immediately after"), that is pretty much as good as TDD. The problem occurs when you write the tests days or weeks later, if at all. The popularity of code coverage tools such as Clover and Jester amongst agile programmers is a clear sign that many of them really are taking a "test after" approach. These tools warn you when you've written code that no test covers, prodding you to write the tests you would ideally have written first via TDD.

3.3 Parallel Independent Testing

The whole team approach, where agile teams test to the best of their ability, is a great start, but in some situations it isn't sufficient. In the situations described below, you should consider setting up a parallel independent test team to perform some of the more difficult (or perhaps "advanced" is a better term) forms of testing. Figure 13 shows the basic idea. On a regular basis, the development team makes its working build available to the independent test team, or automatically deploys it via its continuous integration tools, so that the independent testers can test it. The goal is not to redo the confirmatory testing the development team already does, but to identify the defects that have fallen through the cracks. This means the independent test team does not need a detailed requirements specification. They may, however, need:

- architecture diagrams;
- a scope overview;
- a list of changes since the last build the development team sent them.

Instead of testing against the specification, the independent testing effort focuses on production-level system integration testing, investigative testing, and formal usability testing, to name a few things.

Figure 13. Parallel independent testing. Important: The development team is still doing the majority of the testing when an independent test team exists. It is just that the independent test team is doing forms of testing that either the development doesn't (yet) have the skills to perform or is too expensive for them to perform. The independent test team reports defects back to the development, indicated as "change stories" inFigure 12 because being agile we tend to rename everything ;-) . These defects are treated as type of requirement by the development team in that they're prioritized, estimated, and put on the work item stack. There are several reason why you should consider parallel independent testing: Investigative testing. Confirmatory testing approaches, such as TDD, validate that you've implemented the requirements as they've been described to you. But what happens when requirements are missing? User stories, a favorite requirements elicitation technique within the agile community, are a great way to explore functional requirements but defects surrounding non-functional requirements such as security, usability, and performance have a tendency to be missed via this approach. Lack of resources. Furthermore, many development teams may not have the resources required to perform effective system integration testing, resources which from an economic point of view must be shared across multiple teams. The implication is that you will find that you need an independent test team working in parallel to the development team(s) which addresses these sorts of issues. System integration tests often require expensive environment that goes beyond what an individual project team will have Large or distributed teams. Large or distributed teams are often subdivided into smaller teams, and when this happens system integration testing of the overall system can become complex enough that a separate team should consider taking it on. 
In short, whole team testing works well for agile in the small, but for more complex systems andagile at scale you need to be more sophisticated. Complex domains. When you have a very complex domain, perhaps you're working on life critical software or on financial derivative processing, whole team testing approaches can prove insufficient. Having a parallel independent testing effort can reduce these risks. Complex technical environments. When you're working with multiple technologies, legacy systems, or legacy data sources the testing of your system can become very difficult. Regulatory compliance. Some regulations require you to have an independent testing effort. My experience is that the most efficient way to do so is to have it work in parallel to the development team. Production readiness testing. The system that you're building must "play well" with the other systems currently in production when your system is released. To do this properly you must test against versions of other systems which are currently under development, implying that you need access to updated versions on a regular basis. This is fairly straightforward in small organizations, but if your organization has dozens, if not hundreds of IT projects underway it becomes overwhelming for individual development teams to gain such access. A more efficient approach is to have an independent test team be responsible for such enterprise-level system integration testing. Some agilists will claim that you don't need parallel independent testing, and in simple situations this is clearly true. The good news is that it's incredibly easy to determine whether or not your independent testing effort is providing value: simply compare the likely impact of the defects/change stories being reported with the cost of doing the independent testing. 3.4 Defect Management Defect management is often much simpler on agile projects when compared to classical/traditional projects for two reasons. 
First, with a whole team approach to testing, when a defect is found it's typically fixed on the spot, often by the person(s) who injected it in the first place. In this case the entire defect management process is at most a conversation between a few people. Second, when an independent test team is working in parallel with the development team to validate their work, they typically use a defect reporting tool, such as IBM Rational ClearQuest or Bugzilla, to inform the development team of what they found.

Disciplined agile delivery teams combine their requirements management and defect management strategies to simplify their overall change management process. Figure 14 summarizes this (yes, it's the same as Figure 6), showing how work items are worked on in priority order. Both requirements and defect reports are types of work items and are treated equally: they're estimated, prioritized, and put on the work item stack.
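To make the "single work item stack" idea concrete, here is a minimal sketch in Python. It assumes a simple in-memory stack ordered by a numeric priority (lower number means more urgent); `WorkItem`, `WorkItemStack`, and the `extra_cost` flag are hypothetical names for illustration, not the API of ClearQuest, Bugzilla, or any other tool. A defect is simply reworded as "please fix X" and pushed onto the same stack as requirements. The `extra_cost` flag anticipates the pragmatic fixed-price tweak discussed below: one process, with items marked as billable or not.

```python
import heapq
import itertools
from dataclasses import dataclass, field


@dataclass(order=True)
class WorkItem:
    # Only (priority, seq) take part in ordering: a lower priority number is more
    # urgent, and seq breaks ties so equal-priority items keep their arrival order.
    priority: int
    seq: int
    title: str = field(compare=False)
    kind: str = field(compare=False)  # "requirement" or "defect"
    estimate_points: int = field(compare=False)
    # Hypothetical fixed-price flag: True means the customer pays extra for this item.
    extra_cost: bool = field(default=False, compare=False)


class WorkItemStack:
    """One prioritized stack holding requirements and defect reports alike."""

    def __init__(self) -> None:
        self._heap: list[WorkItem] = []
        self._seq = itertools.count()

    def _push(self, title, kind, priority, estimate_points, extra_cost):
        item = WorkItem(priority, next(self._seq), title, kind,
                        estimate_points, extra_cost)
        heapq.heappush(self._heap, item)
        return item

    def add_requirement(self, title, priority, estimate_points, extra_cost=False):
        return self._push(title, "requirement", priority, estimate_points, extra_cost)

    def add_defect(self, description, priority, estimate_points):
        # Defect X becomes the requirement "please fix X": it is estimated and
        # prioritized like any other item, and fixing it is not billed extra.
        return self._push(f"Please fix: {description}", "defect",
                          priority, estimate_points, extra_cost=False)

    def pop_next(self) -> WorkItem:
        # The team always pulls the highest-priority item next, whatever its kind.
        return heapq.heappop(self._heap)

    def __len__(self) -> int:
        return len(self._heap)
```

For example, after adding a priority-2 requirement, then a priority-1 defect, then another priority-2 requirement, `pop_next()` hands back the defect first and then the two requirements in the order they were added. Using one heap rather than separate requirement and defect queues is the point of the design: nothing about an item's kind changes how it gets scheduled.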

Figure 14. Agile defect change management process.

This works because defects are just another type of requirement. Defect X can be reworded into the requirement "please fix X". Requirements are also a type of defect; in effect, a requirement is simply missing functionality. In fact, some agile teams will even capture requirements using a defect management tool; for example, the Eclipse development team uses Bugzilla and the Jazz development team uses Rational Team Concert (RTC).

The primary impediment to adopting this strategy, that of treating requirements and defects as the same thing, occurs when the agile delivery team finds itself in a fixed-price or fixed-estimate situation. In such situations the customer typically needs to pay for new requirements that weren't agreed to at the beginning of the project but should not pay for fixing defects. The bureaucratic approach would be to have two separate change management processes; the pragmatic approach would be to simply mark each work item as something that needs to be paid extra for (or not). Naturally I favor the pragmatic approach. If you find yourself in a fixed-price situation you might be interested to know that I've written a fair bit about this and, more importantly, about alternatives for funding agile projects. To be blunt, I vacillate between considering fixed-price strategies unethical and considering them simply a sign of grotesque incompetence on the part of the organization insisting on them. Merging your requirements and defect management processes into a single, simple change management process is a wonderful opportunity for process improvement. Exceptionally questionable project funding strategies shouldn't prevent you from taking advantage of this strategy.

3.5 End of Lifecycle Testing

An important part of the release effort for many agile teams is end-of-lifecycle testing, where an independent test team validates that the system is ready to go into production.
If the independent parallel testing practice has been adopted, then end-of-lifecycle testing can be very short, as the issues have already been substantially covered. As you see in Figure 15, the independent testing efforts stretch into the release phase (called the Transition phase of Disciplined Agile Delivery) of the delivery lifecycle because the independent test team will still need to test the complete system once it's available.

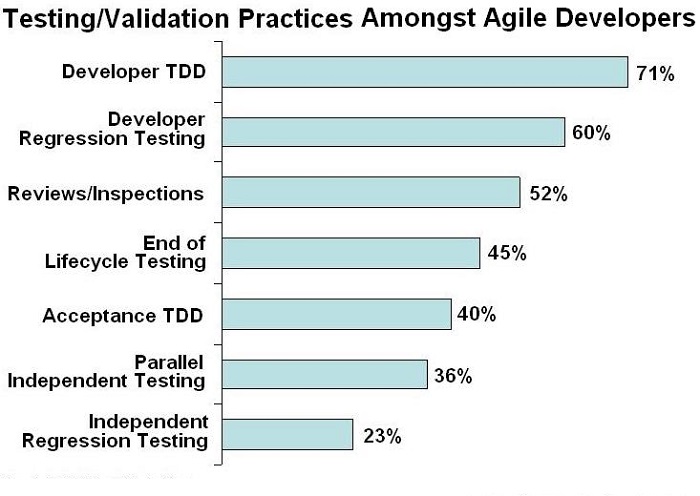

Figure 15. Independent testing throughout the lifecycle.

There are several reasons why you still need to do end-of-lifecycle testing:

It's professional to do so. You'll minimally want to do one last run of all of your regression tests in order to be in a position to officially declare that your system is fully tested. This would clearly occur once iteration N, the last construction iteration, finishes (or it would be the last thing you do in iteration N; don't split hairs over stuff like that).

You may be legally obligated to do so. This is either because of the contract that you have with the business customer or due to regulatory compliance (many agile teams find themselves in such situations, as the November 2009 State of the IT Union survey discovered).

Your stakeholders require it. Your stakeholders, particularly your operations department, will likely require some sort of testing effort before releasing your solution into production in order to feel comfortable with the quality of your work.

Little is publicly discussed in the mainstream agile community about end-of-lifecycle testing; the assumption of many people following mainstream agile methods such as Scrum is that techniques such as TDD are sufficient. This might be because much of the mainstream agile literature focuses on small, co-located agile development teams working on fairly straightforward systems. But when one or more scaling factors (such as large team size, geographically distributed teams, regulatory compliance, or complex domains) apply, you need more sophisticated testing strategies. Regardless of some of the rhetoric you may have heard in public, as we see in the next section, a fair number of TDD practitioners are indicating otherwise in private.

3.6 Who is Doing This?

Figure 16 summarizes the results of one of the questions from Ambysoft's 2008 Test Driven Development (TDD) Survey, which asked the TDD community which testing techniques they were using in practice.
Because this survey was sent to the TDD community, it doesn't accurately represent the adoption rate of TDD at all, but what is interesting is the fact that respondents clearly indicated that they weren't only doing TDD (nor was everyone doing TDD, surprisingly). Many were also doing reviews and inspections, end-of-lifecycle testing, and parallel independent testing, activities which the agile purists rarely seem to discuss.

Figure 16. Testing/Validation practices on agile teams.

Furthermore, Figure 17, which summarizes results from the 2010 How Agile Are You? survey, provides insight into which validation strategies are being followed by teams claiming to be agile. I suspect that the adoption rates reported for developer TDD and acceptance TDD, 53% and 44% respectively, are much more realistic than those reported in Figure 16.

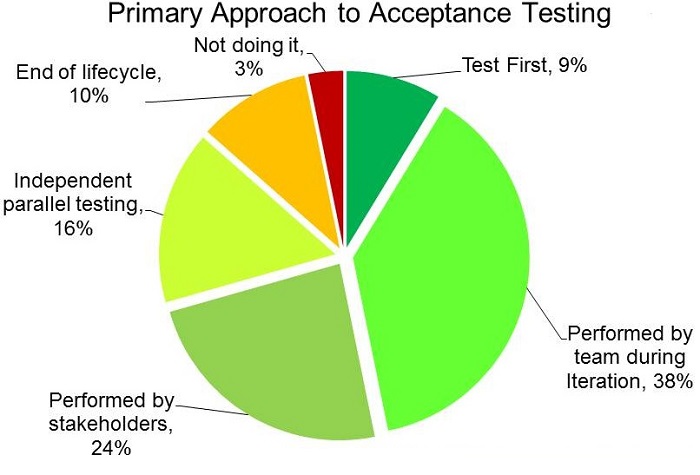

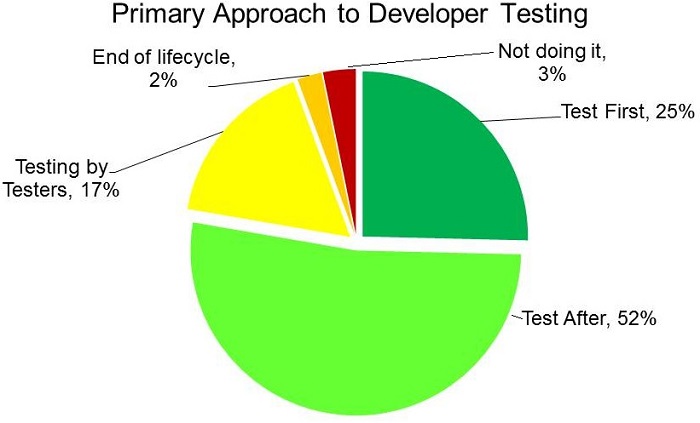

Figure 17. How agile teams validate their own work.

Figure 18 and Figure 19 summarize results from the Agile Testing Survey 2012. These charts indicate the PRIMARY approach to acceptance testing and developer testing, respectively. On the surface there are discrepancies between the results shown in Figure 17 and those in Figure 18 and Figure 19. For example, Figure 17 shows an adoption rate of 44% for ATDD but Figure 18 shows only a 9% rate. This is because the questions were different: the 2010 survey asked whether a team was following the practice, whereas the 2012 survey asked whether it was the primary approach. So a team may be taking a test-first approach to acceptance testing while other approaches are more common on that team, in which case ATDD isn't that team's primary strategy for acceptance testing. When it comes to test-first approaches, it's clear that we still have a long way to go until they dominate.
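The gap between "following a practice" and "using it as the primary approach" is easy to see with a toy calculation. The sketch below uses four made-up teams (hypothetical data, not responses from either survey): each team lists every acceptance-testing practice it follows plus the single one it relies on most. Because a team can follow several practices but has only one primary approach, following rates can sum to more than 100% while primary rates always sum to exactly 100%, so a practice's primary rate is bounded by, and usually well below, its following rate.

```python
# Hypothetical responses (NOT survey data): the practices each team follows,
# and the one practice it relies on most for acceptance testing.
TEAMS = [
    {"follows": {"ATDD", "manual"}, "primary": "manual"},
    {"follows": {"ATDD", "test-after automation"}, "primary": "ATDD"},
    {"follows": {"manual"}, "primary": "manual"},
    {"follows": {"test-after automation", "manual"}, "primary": "test-after automation"},
]


def following_rate(teams, practice):
    # Share of teams that use the practice at all (a multi-select question).
    return sum(practice in t["follows"] for t in teams) / len(teams)


def primary_rate(teams, practice):
    # Share of teams for which the practice is the main approach (single-select).
    return sum(t["primary"] == practice for t in teams) / len(teams)


if __name__ == "__main__":
    for practice in ("ATDD", "manual", "test-after automation"):
        print(practice, following_rate(TEAMS, practice), primary_rate(TEAMS, practice))
```

With these invented numbers, half the teams follow ATDD but it is primary for only a quarter of them, the same shape as the 44% versus 9% difference between the two surveys.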

Figure 18. Primary approach to acceptance testing.

Figure 19. Primary approach to developer testing.

3.7 Implications for Test Practitioners

There are several critical implications for existing test professionals:

Become generalizing specialists. The implication of whole team testing is that most existing test professionals will need to be prepared to do more than just testing if they want to be involved with agile projects. Yes, the people on independent test teams would still focus solely on testing, but the need for people in this role is much less than the need for people with testing skills to be active members of agile delivery teams.

Be flexible. Agile teams take an iterative and collaborative approach which embraces changing requirements. The implication is that gone are the days of having a detailed requirements speculation to work from; now anyone involved with testing must be flexible enough to test throughout the entire lifecycle, even when the requirements are changing.

Be prepared to work closely with developers. The majority of the testing effort is performed by the agile delivery team itself, not by independent testers.

Be flexible. This is worth repeating. ;-)

Focus on value-added activities. I've lost track of the number of times I've heard test professionals lament that there's never enough time or resources allocated to testing efforts. Yet, when I explore what these people want to do, I find that they want to wait to have detailed requirements speculations available to them, they want to develop documents describing their test strategies, they want to write detailed test plans, they want to write detailed defect reports, and yes, they even want to write and then run tests. No wonder they don't have enough time to get all this work done! The true value-added activities which testers provide are finding potential defects and then communicating them to the people responsible for fixing them.
To do this they clearly need to create and run tests; all the other activities that I listed previously are at best ancillary to this effort. Waiting for requirements speculations isn't testing. Writing test strategies and plans isn't testing. Writing defect reports might be of value, but there are better ways to communicate information than writing documentation. Agile strategies focus on the value-added activities and minimize, if not eliminate, the bureaucratic waste which is systemic in many organizations following classical/traditional strategies.

Be flexible. This is really important.
